Aspiring filmmakers are quite lucky compared to those of years past. Today, you can make a movie in just about any format and still be taken seriously, assuming that you have a great story and reasonably good production values. As mentioned, The Blair Witch Project is one of the most successful independent features ever made, yet it was shot with a consumer video camera (non-digital).

Prior to the digital revolution of the 1990s, things were a lot different. If a movie was shot on a format other than 35mm, it did not stand a chance of being distributed. 16mm was not taken seriously and video was a joke. These standards were so ingrained in the industry that even actors were reluctant to work on non-35mm shoots.

All that has changed now. Affordable, high-quality digital cameras have democratized the industry. Still, 35mm film is the standard by which all video formats are judged.

Has video reached the same quality level as 35mm? Old-school filmmakers say "no" because the image-capturing ability of 35mm is a "gazillion" times greater than that of video. Is this really the case? Let's take a closer look. The truth may surprise you.

Note: the study below is based on classic HD with 1080 horizontal scan lines. In 2007, the first ultra HD camera was introduced, featuring an amazing 4,520 lines. Keep that in mind while reading!

Comparison

There are two factors that can be compared: color and resolution. Most casual observers will agree that, assuming a quality TV monitor, HD color is truly superb. To avoid a long-winded mathematical argument, let's accept this at face value and focus on comparing resolution, which is the real point of contention.

Resolution is the visible detail in an image. Since a pixel is the smallest unit of information in the digital world, it would seem that comparing pixel counts is a good way to compare relative resolution.

Film is analog, so there are no real "pixels." However, based on converted measures, a 35mm frame has the equivalent of 3 to 12 million pixels, depending on the stock, lens, and shooting conditions. An HD frame has about 2 million pixels (1920 x 1080). With this difference, 35mm appears vastly superior to HD.
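
The arithmetic behind this pixel argument can be checked with a short Python sketch. It uses only the figures quoted above (a 1920 x 1080 HD frame and the 3 to 12 million pixel range for 35mm); the function and constant names are illustrative, not taken from any library.

```python
# Pixel-count comparison using only the figures quoted in the text.
# All names here are illustrative assumptions.

HD_WIDTH = 1920
HD_HEIGHT = 1080

# Equivalent pixel range quoted for a 35mm frame (varies with stock,
# lens, and shooting conditions).
FILM_PIXELS_LOW = 3_000_000
FILM_PIXELS_HIGH = 12_000_000


def hd_pixel_count(width=HD_WIDTH, height=HD_HEIGHT):
    """Total pixels in one HD frame."""
    return width * height


def film_to_hd_ratio(film_pixels, hd_pixels):
    """How many times more 'pixels' a film frame has than an HD frame."""
    return film_pixels / hd_pixels


hd_pixels = hd_pixel_count()
low_ratio = round(film_to_hd_ratio(FILM_PIXELS_LOW, hd_pixels), 1)
high_ratio = round(film_to_hd_ratio(FILM_PIXELS_HIGH, hd_pixels), 1)

print(f"HD frame: {hd_pixels:,} pixels")
print(f"35mm vs. HD: {low_ratio}x to {high_ratio}x")
```

By pixel count alone, 35mm comes out roughly 1.4 to 5.8 times ahead of HD, which is a clear advantage but a long way from a "gazillion."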

This is the argument most film purists use. The truth is, pixels are not the way to compare resolution. The human eye cannot see individual pixels beyond a short distance. What we can see are lines.

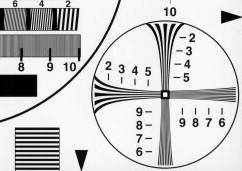

Consequently, manufacturers measure the sharpness

of photographic images and components using a parameter called

Modulation Transfer Function (MTF). This process uses

lines (not pixels) as a basis for comparison. Notice the lines in this

resolution chart:

Part of a Standard Resolution Chart

Since MTF is an industry standard, we will maintain this standard for comparing HD with 35mm film. In other words, we will make the comparison using lines rather than pixels. Scan lines are the way video images are compared, so it makes sense from this viewpoint, as well.

HD Resolution

As discussed previously, standard definition and high definition refer to the number of scan lines in the video image. Standard definition is 525 horizontal lines for NTSC and 625 lines for PAL.

Technically, anything that breaks the PAL barrier of 625 lines could be called high definition. The most common HD formats are 720p and 1080i, with 720 and 1080 lines respectively.

35mm Resolution

There is an international study on this issue, called Image Resolution of 35mm Film in Theatrical Presentation. It was conducted by Hank Mahler (CBS, United States), Vittorio Baroncini (Fondazione Ugo Bordoni, Italy), and Mattieu Sintas (CST, France).

In the study, MTF measurements were used to determine the typical resolution of theatrical release prints and answer prints in normal operation, utilizing existing state-of-the-art 35mm film, processing, printing, and projection.

The prints were projected in six movie theaters in various countries, and a panel of experts made the assessments of the projected images using a well-defined formula.

The results are as follows:

35mm RESOLUTION

| Measurement | Lines |
| --- | --- |
| Answer Print MTF | 1400 |
| Release Print MTF | 1000 |
| Theater Highest Assessment | 875 |
| Theater Average Assessment | 750 |
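
To see how these figures stack up against HD, the Python sketch below sets each 35mm measurement from the study beside the 720-line and 1080-line HD formats mentioned earlier. Treating MTF-derived line counts and video scan lines as directly comparable is this lesson's simplification, so read the output as a rough guide rather than a lab result. The names used are illustrative.

```python
# Compare the study's 35mm line measurements with common HD line counts.
# Assumption: film "lines" and video scan lines are compared one-to-one,
# as the lesson does. All names are illustrative.

FILM_LINES = {
    "Answer Print MTF": 1400,
    "Release Print MTF": 1000,
    "Theater Highest Assessment": 875,
    "Theater Average Assessment": 750,
}

HD_FORMATS = {"720p": 720, "1080i": 1080}


def formats_meeting(film_lines, hd_formats=HD_FORMATS):
    """Return the HD formats whose line count matches or exceeds film_lines."""
    return [name for name, lines in hd_formats.items() if lines >= film_lines]


for label, lines in FILM_LINES.items():
    matches = formats_meeting(lines) or ["none"]
    print(f"{label}: {lines} lines, matched or exceeded by {', '.join(matches)}")
```

On these numbers, only the answer print out-resolves 1080-line HD. The release print and both theater assessments fall below 1080 lines, while 720 lines comes in slightly under the theater average of 750.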

Conclusion

As the study indicates, perceived differences between HD and 35mm film are quickly disappearing. Notice I use the word "perceived." This is important because we are not shooting a movie for laboratory study, but rather for audiences.

At this point, the typical audience cannot see the difference between HD and 35mm. Even professionals have a hard time telling them apart. We go through this all the time at NYU ("Was this shot on film or video?").

Again, the study was based on standard HD with 1080 horizontal scan lines. We now have ultra HD with 4,520 lines.

Based on this, the debate is moot. 16mm, 35mm, DV, and HD are all tools of the filmmaker. The question is not which format is best, but rather, which format is best for your project? The answer, of course, is based on a balance between aesthetic and budgetary considerations.



Copyright © Film School Online!
